“In 1950, Alan Turing published his groundbreaking paper ‘Computing Machinery and Intelligence,’ laying the foundation for what would later be known as the Turing Test, a benchmark for assessing machine intelligence. Just a few years later, the term ‘artificial intelligence’ was coined, marking the birth of a new era in technology and thought.”

Contents

- The History of Artificial Intelligence: From Ancient Ideas to Modern Algorithms

- History of Artificial Intelligence: Conceptual Foundations and Early Theories

- History of Artificial Intelligence: AI Timeline

- Laying the Groundwork for AI (1900–1950)

- History of Artificial Intelligence: Symbolic AI and the Era of Expert Systems (1970s–1980s)

- History of Artificial Intelligence: Rise of Machine Learning and Neural Networks (1990s–2000s)

- History of Artificial Intelligence: Deep Learning and Modern AI Applications (2010s–Present)

- History of Artificial Intelligence: Pioneers and Key Figures in AI History

- History of Artificial Intelligence: Conclusion

- History of Artificial Intelligence: FAQ Section

- History of Artificial Intelligence Comparison Table: Symbolic AI vs ML vs Deep Learning

The History of Artificial Intelligence: From Ancient Ideas to Modern Algorithms

Artificial Intelligence (AI) is the simulation of human intelligence in machines designed to think, learn, and perform tasks typically requiring human cognition. These tasks include problem-solving, language understanding, image recognition, decision-making, and more. While AI might seem like a modern marvel, its conceptual roots stretch back centuries, with philosophers and mathematicians imagining intelligent machines long before the technology existed to build them.

The formal development of AI began in the mid-20th century with groundbreaking theoretical work by pioneers like Alan Turing, followed by the 1956 Dartmouth Conference — considered the birth of AI as a scientific field. Over the decades, AI has evolved from rule-based systems and symbolic reasoning to advanced machine learning and deep learning models that power today’s smart technologies.

Understanding the history of AI provides valuable context for the rapid advancements we’re witnessing today. From voice assistants like Siri and Alexa to autonomous vehicles and large language models like ChatGPT, AI is now embedded in daily life, transforming how we work, communicate, and interact with the world. Exploring AI’s journey helps us appreciate both its milestones and its future potential.

History of Artificial Intelligence: Conceptual Foundations and Early Theories

The idea of intelligent machines has fascinated humans for millennia. In ancient Greek mythology, automatons like Talos—a giant bronze man built to protect Crete—symbolized early visions of artificial beings. Similar concepts appeared in Chinese and Arabic lore, where mechanical creatures were imagined as assistants or warriors, reflecting humanity’s enduring curiosity about creating life-like intelligence.

Philosophically, the foundation of AI draws from rationalism and debates on the nature of the mind. Thinkers like René Descartes questioned the distinction between mind and body, sparking ideas about whether human cognition could be replicated by mechanical systems. Enlightenment philosophers and later mathematicians built frameworks for logic and reasoning that would become central to AI.

In the early 20th century, mathematical logic advanced rapidly through the work of Kurt Gödel, Bertrand Russell, and Alfred North Whitehead. These developments laid the groundwork for formal systems capable of emulating reasoning—a key pillar of AI.

The turning point came in 1950, when British mathematician and logician Alan Turing published “Computing Machinery and Intelligence.” In this groundbreaking paper, Turing proposed the idea of machine intelligence and introduced the imitation game, now known as the Turing Test, a benchmark for determining if a machine could exhibit behavior indistinguishable from a human’s. The paper also discussed “learning machines” that could be taught rather than programmed step by step, anticipating early machine learning concepts and reinforcing the feasibility of intelligent machines. One of the first self-learning programs, a checkers player, was built shortly afterward by Arthur Samuel.

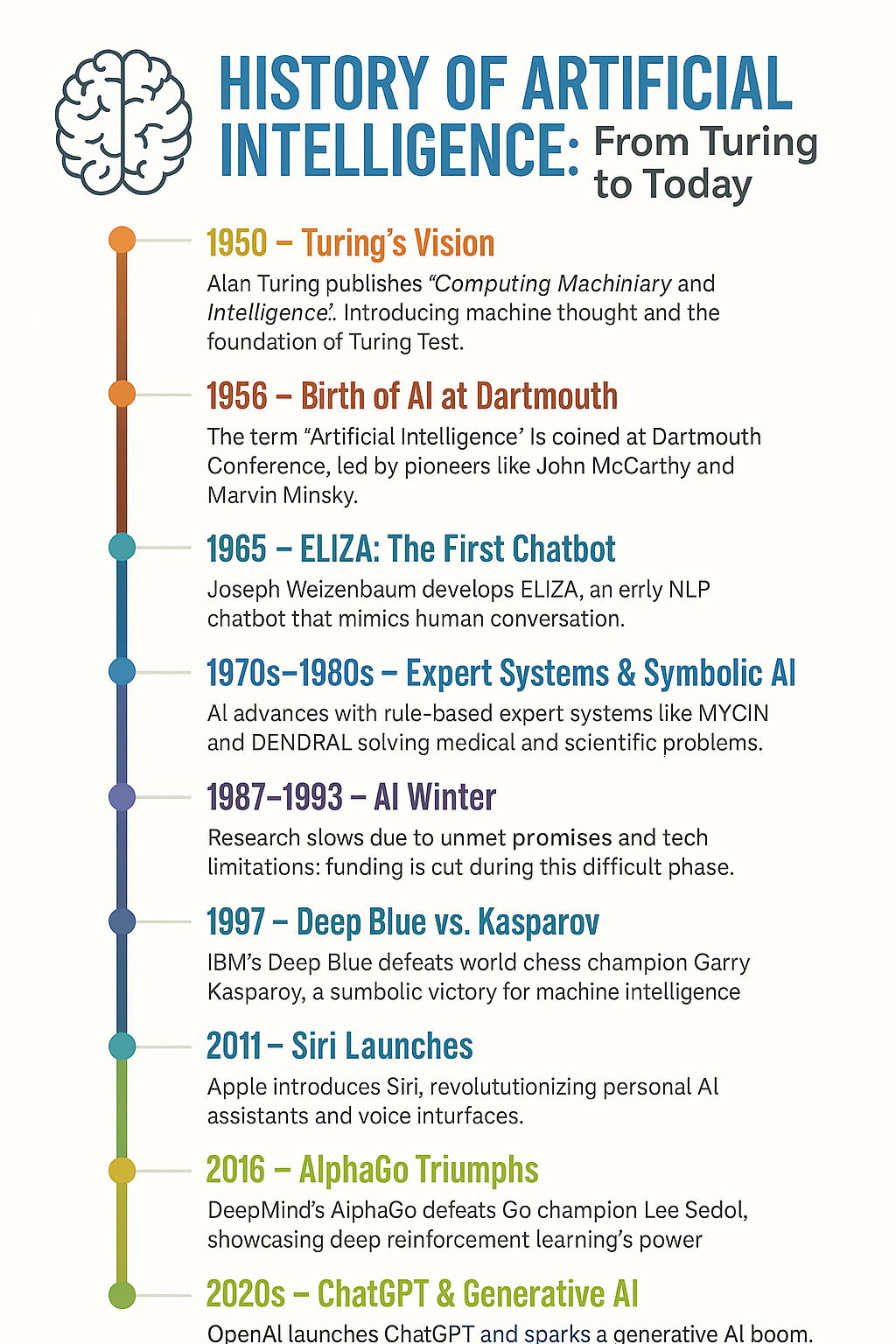

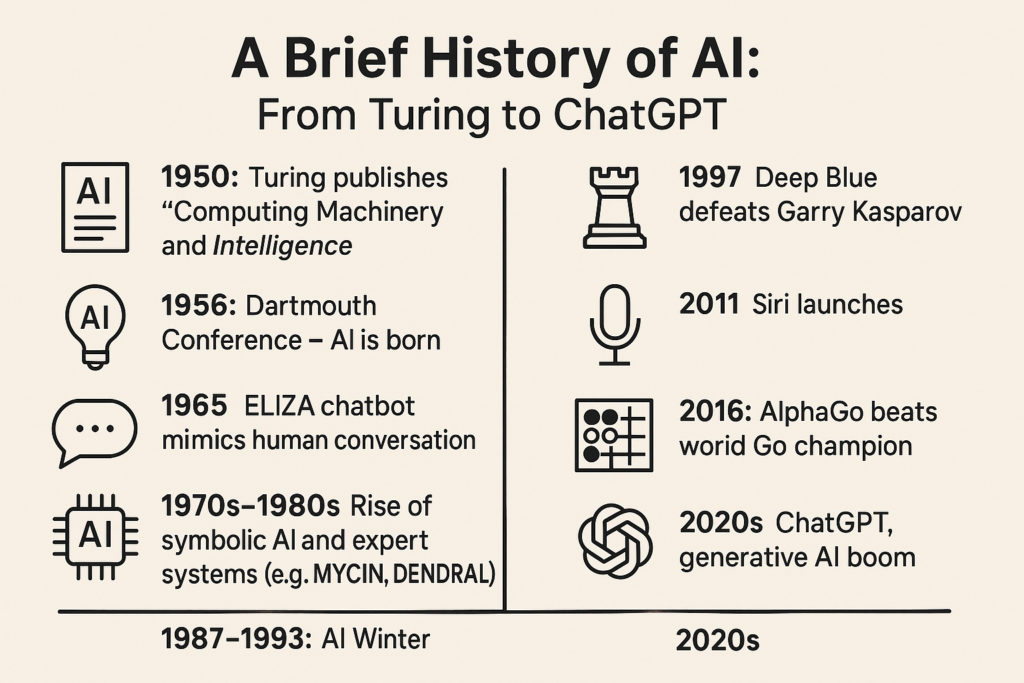

History of Artificial Intelligence: AI Timeline

- 1950: Turing publishes “Computing Machinery and Intelligence”

- 1956: Dartmouth Conference – AI is born

- 1966: ELIZA chatbot mimics human conversation

- 1970s–1980s: Rise of symbolic AI and expert systems (e.g., MYCIN, DENDRAL)

- 1987–1993: Second AI winter

- 1997: Deep Blue defeats Garry Kasparov

- 2011: Siri launches

- 2016: AlphaGo beats world Go champion

- 2020s: ChatGPT, generative AI boom

Laying the Groundwork for AI (1900–1950)

The early 20th century sparked a fascination with the idea of artificial life. Across books, plays, and early machines, a collective curiosity began to form: Could humans build an artificial brain?

During this time, inventors and storytellers alike explored the concept of synthetic beings. While most early prototypes were mechanically simple—often steam-powered—some could mimic basic human-like behaviors, such as walking or making facial expressions.

A few key milestones from this foundational era include:

1921: Czech playwright Karel Čapek debuted his science fiction play Rossum’s Universal Robots. This play introduced the term “robot”, derived from the Czech word robota, meaning forced labor. It marked the first recorded use of the word in reference to artificial people.

1929: Japanese professor Makoto Nishimura developed Gakutensoku, considered Japan’s first robot. It was designed not just for movement but as a symbol of harmony between nature and technology.

1949: American computer scientist Edmund Callis Berkeley published Giant Brains, or Machines That Think. In it, he likened emerging computers to the human brain, setting the stage for modern discussions around machine intelligence.

This era didn’t produce true AI, but it set the intellectual and cultural stage for what would come—introducing the ideas, language, and mechanical groundwork that modern AI builds upon.

History of Artificial Intelligence: Symbolic AI and the Era of Expert Systems (1970s–1980s)

During the 1970s and 1980s, AI research was dominated by symbolic reasoning and the development of knowledge-based systems, often referred to as expert systems. These systems relied on manually encoded rules and logic to simulate decision-making processes within specific domains. The idea was to replicate the expertise of human specialists using large sets of “if-then” rules.
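To make the “if-then” idea concrete, here is a minimal sketch of forward chaining, one common way a rule-based system derives conclusions from known facts. The rules and fact names are invented for illustration and are not taken from MYCIN, DENDRAL, or any real expert system; the function name `forward_chain` is likewise hypothetical.

```python
# Hypothetical toy rules: (set of required facts, fact to conclude).
# These are invented for illustration and do not come from a real expert system.
RULES = [
    ({"fever", "cough"}, "respiratory_infection"),
    ({"respiratory_infection", "chest_pain"}, "suspect_pneumonia"),
    ({"suspect_pneumonia"}, "recommend_chest_xray"),
]


def forward_chain(facts, rules):
    """Apply if-then rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"fever", "cough", "chest_pain"}, RULES)
print(sorted(derived))
```

Every conclusion here traces back to a rule a person wrote by hand, which is why such systems are easy to inspect but cannot handle a situation nobody encoded.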

Two of the most prominent expert systems from this era were MYCIN and DENDRAL. Developed at Stanford University, MYCIN was designed to diagnose bacterial infections and recommend antibiotics, while DENDRAL assisted chemists in analyzing mass spectrometry data to determine molecular structures. These systems demonstrated that AI could perform as well as—or even better than—human experts in narrow, well-defined areas.

However, symbolic AI had critical limitations. These systems were brittle—they struggled with ambiguity and could not adapt beyond their pre-programmed rules. Their lack of scalability made them impractical for broader, more dynamic environments. Since all knowledge had to be entered manually, creating and maintaining these systems became labor-intensive and costly. Most importantly, they lacked true learning capabilities.

As a result of these limitations, the field experienced two major downturns, known as AI Winters. The first AI winter (1974–1980) occurred when high expectations were not met, leading governments and organizations to cut funding. The second AI winter (1987–1993) followed the commercial collapse of expert systems, as businesses realized these tools were not as versatile or reliable as initially promised.

Several factors contributed to these setbacks, including insufficient computing power, overhyped media portrayals, and a mismatch between academic AI goals and real-world business needs. Despite the decline, this period was critical for advancing AI theories and infrastructure, and it highlighted the need for systems that could learn from data rather than rely solely on predefined logic.

History of Artificial Intelligence: Rise of Machine Learning and Neural Networks (1990s–2000s)

By the 1990s, the limitations of symbolic AI prompted a major shift toward statistical methods, giving rise to machine learning (ML) as the dominant approach. Unlike symbolic systems, machine learning models learn from data, allowing them to adapt and improve without being explicitly programmed for every scenario.

At the core of this movement was the resurgence of neural networks, computational models inspired by the human brain. Although the concept had been introduced decades earlier, it was the popularization of the backpropagation algorithm in the mid-1980s, and its refinement through the early 1990s, that made training multi-layer neural networks practical. Backpropagation works out how much each internal weight contributed to the output error, so the network can adjust its weights efficiently and improve through repeated exposure to data.
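As a rough illustration of backpropagation, the sketch below trains a tiny network with two hidden units on the XOR problem using only the Python standard library. The network size, learning rate, random seed, and function names are all assumptions chosen for this example, not a reconstruction of any historical system.

```python
import math
import random

# XOR: a classic task that a network without a hidden layer cannot solve.
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
HIDDEN = 2  # number of hidden units (an arbitrary choice for this sketch)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(p, x):
    """p is a flat list: hidden weights, hidden biases, output weights, output bias."""
    h = [sigmoid(p[2 * j] * x[0] + p[2 * j + 1] * x[1] + p[2 * HIDDEN + j])
         for j in range(HIDDEN)]
    y = sigmoid(sum(p[3 * HIDDEN + j] * h[j] for j in range(HIDDEN)) + p[4 * HIDDEN])
    return h, y


def mean_loss(p):
    """Mean of half the squared error over the four XOR examples."""
    return sum(0.5 * (forward(p, x)[1] - t) ** 2 for x, t in DATA) / len(DATA)


def backprop(p):
    """Gradient of mean_loss with respect to every parameter, via the chain rule."""
    grad = [0.0] * len(p)
    for x, t in DATA:
        h, y = forward(p, x)
        d_out = (y - t) * y * (1.0 - y)  # error signal at the output unit
        for j in range(HIDDEN):
            # Error signal passed back from the output to hidden unit j.
            d_hid = d_out * p[3 * HIDDEN + j] * h[j] * (1.0 - h[j])
            grad[2 * j] += d_hid * x[0]
            grad[2 * j + 1] += d_hid * x[1]
            grad[2 * HIDDEN + j] += d_hid
            grad[3 * HIDDEN + j] += d_out * h[j]
        grad[4 * HIDDEN] += d_out
    return [g / len(DATA) for g in grad]


def train(epochs=5000, lr=0.5, seed=0):
    """Full-batch gradient descent; returns final parameters and the loss history."""
    rng = random.Random(seed)
    p = [rng.uniform(-1.0, 1.0) for _ in range(4 * HIDDEN + 1)]
    losses = [mean_loss(p)]
    for _ in range(epochs):
        p = [w - lr * g for w, g in zip(p, backprop(p))]
        losses.append(mean_loss(p))
    return p, losses


params, losses = train()
print(f"loss before training: {losses[0]:.4f}, after: {losses[-1]:.4f}")
```

The key step is in `backprop`: the output error is multiplied back through the output weights and the sigmoid derivative to get an error signal for each hidden unit, which is what lets weights that are not directly connected to the output be adjusted.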

One of the most iconic milestones of this era came in 1997, when IBM’s Deep Blue, a chess-playing system, defeated reigning world chess champion Garry Kasparov. Deep Blue relied on specialized hardware and large-scale search rather than on learning from data, but the match demonstrated that computers could match, and even surpass, human expertise in complex tasks previously thought to require intuition and strategy.

This period also saw progress in computer vision and speech recognition as researchers began applying neural networks to recognize patterns in images and spoken language. Though results were still limited by hardware constraints, the foundation was being laid for more advanced applications to come.

Crucially, the infrastructure supporting AI began to evolve. The explosion of digital data, driven by the internet and the proliferation of digital devices, provided machine learning systems with the raw material they needed to improve. Simultaneously, computing hardware became more powerful and accessible, with the advent of GPUs accelerating training processes.

Another major enabler was the rise of shared software tools and increased collaboration within the research community. Tools like MATLAB, and later open-source Python libraries such as scikit-learn and TensorFlow, made machine learning more accessible to developers and researchers alike, fueling rapid innovation and democratizing AI development.

History of Artificial Intelligence: Deep Learning and Modern AI Applications (2010s–Present)

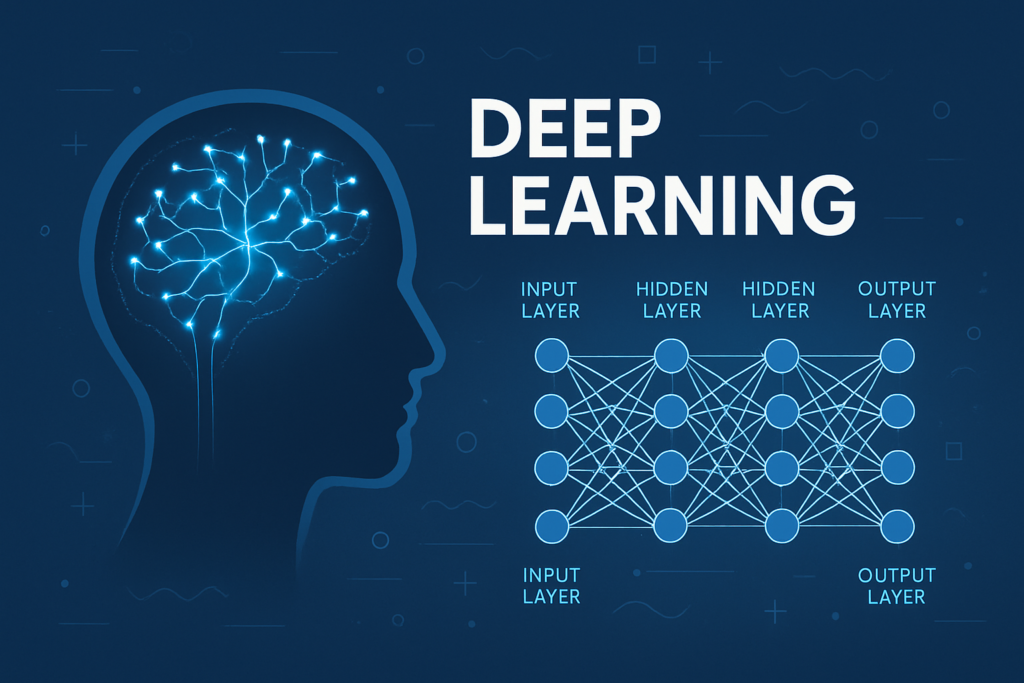

The 2010s marked a turning point for artificial intelligence, driven by dramatic advances in deep learning—a subfield of machine learning that uses layered neural networks to model complex patterns in data. These networks, particularly Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), began outperforming traditional models in tasks like image recognition and language processing.

CNNs, inspired by the structure of the visual cortex, revolutionized image and video processing, enabling breakthroughs in facial recognition, autonomous vehicles, and medical imaging. Simultaneously, RNNs, and later Transformer models, transformed how machines understood and generated human language. Transformers, introduced in 2017 with Google’s “Attention Is All You Need” paper, laid the groundwork for modern large language models (LLMs).

The impact of these technologies soon became visible in consumer applications. In 2011, Apple launched Siri, the first voice assistant to gain mainstream adoption. Google Assistant and Amazon Alexa followed, offering smart voice interfaces powered by natural language processing (NLP).

One of the most celebrated deep learning milestones came in 2016, when DeepMind’s AlphaGo defeated the Go world champion, Lee Sedol. Go, a complex strategy game with more possible board configurations than there are atoms in the observable universe, had long been considered a significant challenge for AI. AlphaGo’s success demonstrated the power of deep reinforcement learning and marked a major leap in machine decision-making capabilities.
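The scale of Go can be checked with quick arithmetic. The sketch below computes a simple upper bound on board configurations by letting each of the 361 points on a 19 x 19 board be empty, black, or white. Not every such arrangement is a legal position, so this is only an upper bound, and the figure of 10^80 atoms is a commonly quoted rough estimate that is assumed here rather than derived.

```python
# Upper bound: each of the 19 x 19 = 361 points is empty, black, or white.
upper_bound = 3 ** 361

# Commonly quoted rough estimate of atoms in the observable universe
# (an assumption for this comparison, not a precise figure).
atoms_estimate = 10 ** 80

# Number of decimal digits minus one gives the order of magnitude.
print("order of magnitude of the bound:", len(str(upper_bound)) - 1)
print("bound exceeds atom estimate:", upper_bound > atoms_estimate)
```

Even allowing for the many illegal arrangements included in this bound, the gap is large enough to show why exhaustive search was never a realistic approach to Go.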

The rise of Generative AI and Large Language Models (LLMs) further reshaped the field. OpenAI’s GPT series, and the release of ChatGPT in late 2022, showcased the ability of AI to generate coherent, human-like text across a wide range of domains, from answering questions and writing code to composing essays and translating languages. These models are now integrated into tools for customer service, content creation, education, marketing, and software development.

Despite rapid progress, the current era is not without challenges. Concerns about ethics, algorithmic bias, data privacy, and AI regulation have grown alongside technical achievements. The debate over Artificial General Intelligence (AGI)—machines that can perform any intellectual task a human can—continues, with most experts recognizing that today’s systems remain narrow AI, excelling only in specific domains.

Nonetheless, AI is becoming deeply embedded in daily life, influencing healthcare diagnostics, financial modeling, targeted advertising, e-learning platforms, and beyond. As capabilities grow, so too does the need for thoughtful governance to ensure AI benefits society as a whole.

History of Artificial Intelligence: Pioneers and Key Figures in AI History

The history of artificial intelligence is shaped by the vision and innovation of several key pioneers whose foundational work laid the groundwork for the field as we know it today.

Alan Turing, often referred to as the father of modern computing, developed the theoretical basis for machine intelligence. In his seminal 1950 paper “Computing Machinery and Intelligence,” he posed the question, “Can machines think?” and introduced the Turing Test—a method for evaluating a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human.

John McCarthy was instrumental in formalizing AI as a scientific discipline. He coined the term “artificial intelligence” in the 1955 proposal for the Dartmouth Conference, the 1956 workshop he co-organized that marked the field’s official inception. McCarthy also developed LISP, a programming language that became essential for early AI research.

Marvin Minsky, a co-founder of MIT’s AI Lab, made significant contributions to understanding human cognition, perception, and machine learning. He was also deeply involved in philosophical discussions around AI’s potential and limitations.

Herbert Simon and Allen Newell played a crucial role in the early days of AI by creating the Logic Theorist, the first program capable of mimicking human problem-solving. They also developed the General Problem Solver (GPS), a foundational model for simulating reasoning.

Arthur Samuel, another key figure, is credited with coining the term “machine learning.” He created one of the first self-learning programs: a checkers-playing system that improved by playing games against itself.

In more recent history, Geoffrey Hinton, Yann LeCun, and Yoshua Bengio—often called the “Godfathers of Deep Learning”—pioneered the techniques that underpin today’s neural networks, making deep learning the dominant force in modern AI.

These individuals collectively transformed AI from a philosophical concept into a powerful technological reality.

History of Artificial Intelligence: Conclusion

The history of artificial intelligence has unfolded through several transformative phases—beginning with philosophical speculation and early theories, evolving into symbolic reasoning and expert systems, and eventually giving rise to the data-driven, learning-based systems we see today. From the foundational ideas of Alan Turing and John McCarthy to the deep learning revolution led by pioneers like Geoffrey Hinton, AI has grown from a theoretical pursuit into a force shaping nearly every aspect of modern life.

What began as simple rule-based systems has matured into complex algorithms capable of perception, reasoning, and even creativity. Today’s AI powers voice assistants, recommends content, automates customer service, supports medical diagnoses, and helps drive cars—integrating deeply into our personal and professional lives.

Looking ahead, the field continues to grapple with profound questions: Will we achieve Artificial General Intelligence (AGI)? How can we ensure AI systems are ethical, fair, and transparent? Future progress will require not just technical innovation but global collaboration, inclusive policymaking, and a commitment to using AI for the common good. As history has shown, the possibilities are vast—and the journey is far from over.

History of Artificial Intelligence: FAQ Section

- Who coined the term “artificial intelligence”?

John McCarthy coined the term in the 1955 proposal for the Dartmouth Conference, which took place in 1956.

- What caused the AI Winter?

Overpromised results, limited hardware, and poor real-world performance led to reduced funding and interest in the 1970s and late 1980s.

- What is the Turing Test?

A test proposed by Alan Turing to determine if a machine can mimic human responses well enough to be indistinguishable from a person.

- What’s the difference between symbolic AI and machine learning?

Symbolic AI relies on hardcoded rules; ML uses data to train models that learn patterns automatically.

- When did AI become popular?

AI started gaining mainstream popularity in the 2010s, especially with the launch of Apple’s Siri in 2011, which brought AI into everyday life through smartphones. It became massively popular in the 2020s with the rise of generative AI tools like ChatGPT, DALL·E, and Midjourney, which made AI more accessible and useful for the general public.

- Who invented artificial intelligence?

The concept of AI was formalized in 1956 at the Dartmouth Conference, where the term “Artificial Intelligence” was coined.

Key inventors and pioneers include:

John McCarthy, known as the “Father of AI,” who coined the term “Artificial Intelligence.”

Marvin Minsky, Allen Newell, and Herbert A. Simon, early researchers who shaped the field; Newell and Simon developed foundational AI programs like the Logic Theorist.

- Who started AI in India?

As part of India’s Fifth Generation Computer Systems (FGCS) research program, the Department of Electronics, with support from the United Nations Development Programme (UNDP), launched the Knowledge-Based Computer Systems (KBCS) project in 1986. This marked the beginning of India’s first major AI research initiative.

- How is AI used in history?

AI is being used in the field of history to better understand, analyze, and interpret historical data. One example is the development of MapReader, a computer vision tool designed for the meaningful exploration and processing of historical maps.

This is one of several initiatives that leverage the new possibilities offered by Artificial Intelligence (AI) in historical research, including:

- Digitization and translation of ancient texts

- Analysis of large historical datasets

- Automatic generation of timelines and event recognition

- Tracking cultural and linguistic changes over time

AI is transforming how we study history, enabling researchers to explore the past with greater depth and precision.

History of Artificial Intelligence Comparison Table: Symbolic AI vs ML vs Deep Learning

| Feature | Symbolic AI | Machine Learning | Deep Learning |
| --- | --- | --- | --- |
| Approach | Rule-based logic | Statistical models | Multi-layered neural networks |
| Data Dependency | Low | High | Very high |
| Learning Capability | None | Learns from data | Learns from massive data sets |
| Interpretability | High | Medium | Low (black box) |
| Examples | MYCIN, SHRDLU | Decision Trees, SVMs | CNNs, GPT, AlphaGo |
