Contents

- What Is Artificial Intelligence (AI)? A Complete Beginner’s Guide to Understanding How It Works, Its Types, Benefits, and Real-World Applications

- History of AI: Milestones and Breakthroughs

- Technical Foundations of Artificial Intelligence (AI)

- Applications of Artificial Intelligence (AI) Across Industries

- Ethical Considerations and Risks of Artificial Intelligence (AI)

- Recent Advancements in Artificial Intelligence (AI)

- The Future of Artificial Intelligence (AI) – Trends, Opportunities, and Challenges

What Is Artificial Intelligence (AI)? A Complete Beginner’s Guide to Understanding How It Works, Its Types, Benefits, and Real-World Applications

Artificial Intelligence (AI) is the field of computer science focused on creating machines that exhibit human-like intelligence. In simple terms, AI refers to computer systems that can perform complex tasks normally requiring human reasoning or decision-making. These tasks include learning from experience, understanding natural language, recognizing images, and even creative problem-solving. In practice, AI covers a wide range of techniques and approaches. A common view (illustrated by NASA) is that machine learning (ML) is a subset of AI, and deep learning (DL) is a further subset of ML. In other words, deep learning ⊆ machine learning ⊆ AI.

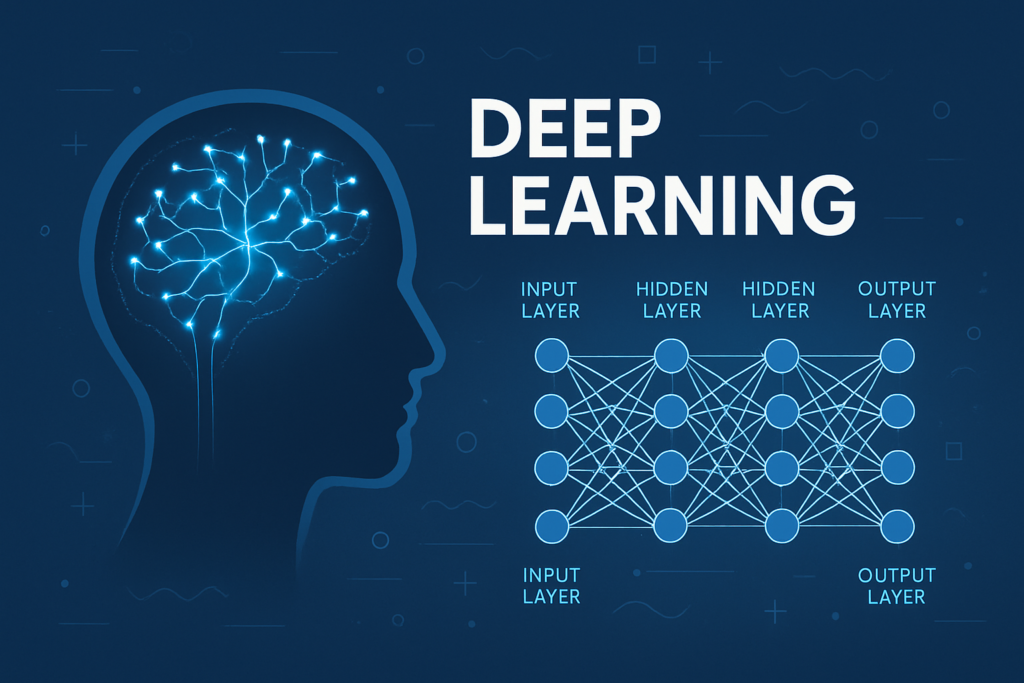

AI systems typically improve their performance by learning from data. Algorithms are trained on large datasets to find patterns or make predictions. For example, supervised learning uses labeled data (input-output pairs) to train models for classification or regression. Unsupervised learning finds structure in unlabeled data (e.g., clustering), while reinforcement learning trains an AI agent to make decisions by trial-and-error feedback (reward/punishment). A key breakthrough in recent years has been deep learning, which uses multilayer artificial neural networks to learn complex relationships. Deep learning networks (often deep neural networks) have many hidden layers and can automatically extract features from raw data. For example, a convolutional neural network (CNN) might learn to recognize objects in images, and a recurrent neural network (RNN) could process sequential data like speech or text.
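To make the idea of supervised learning concrete, here is a minimal sketch of a spam classifier trained on labeled input-output pairs. The dataset, the two features (`suspicious_word_count`, `link_count`), and the hyperparameters are invented for illustration; logistic regression is just one simple choice of model, not the method any particular product uses.

```python
import math

# Hypothetical toy dataset: (suspicious_word_count, link_count) -> 1 = spam, 0 = not spam.
TRAINING_DATA = [
    ((5.0, 3.0), 1),
    ((4.0, 2.0), 1),
    ((6.0, 4.0), 1),
    ((0.0, 0.0), 0),
    ((1.0, 0.0), 0),
    ((0.0, 1.0), 0),
]


def sigmoid(z):
    """Squash a real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(weights, bias, features):
    """Estimated probability that an email with these features is spam."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)


def train(data, learning_rate=0.1, epochs=500):
    """Fit logistic regression with plain stochastic gradient descent."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            # Prediction error on one labeled example drives the update.
            error = predict_proba(weights, bias, features) - label
            weights = [w - learning_rate * error * x for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


weights, bias = train(TRAINING_DATA)
predictions = [int(predict_proba(weights, bias, f) >= 0.5) for f, _ in TRAINING_DATA]
print(predictions)
```

The model never sees a rule such as "many links means spam"; it adjusts its weights until its predictions agree with the labels, which is the essence of learning from labeled data.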

AI also encompasses other concepts like natural language processing (NLP) (understanding and generating human language) and computer vision (interpreting images and video). Specialized AI tools include expert systems (rule-based programs), genetic algorithms (optimizing solutions), and fuzzy logic systems. In practice, most modern AI refers to machine learning and especially deep learning. As one expert puts it, “AI tools…use data and algorithms to train computers to make classifications, generate predictions, or uncover similarities or trends across large datasets”.

- AI (Artificial Intelligence): Computer systems that mimic human cognitive tasks – e.g., reasoning, decision-making, perception, and planning. There is no single definition, but broadly, AI means machines that perform tasks “requiring human-like perception, cognition, planning, learning, communication, or physical action”.

- Machine Learning (ML): A subset of AI where algorithms learn from data. ML involves training models to make predictions or decisions based on data without explicit programming. For example, a model might learn to classify emails as “spam” or “not spam” by analyzing many examples.

- Deep Learning (DL): A subset of ML that uses deep (multi-layer) neural networks. Deep learning is loosely inspired by the brain, using layers of interconnected “neurons”, and is particularly good at processing unstructured data like images or language. Modern AI advances (e.g., image recognition, voice assistants) often rely on deep learning.

- Neural Networks: Computational models inspired by biological brains. A neural network consists of interconnected nodes (neurons) arranged in layers; each neuron processes input and passes signals to the next layer. As one source notes, “Neural networks are modeled after the human brain’s structure and function… [and] process and analyze complex data”. Large-scale “deep” networks (hundreds of layers) underlie state-of-the-art AI.

- Types of ML: Supervised learning uses labeled data to teach the model (e.g., email spam detection). Unsupervised learning finds patterns in unlabeled data (e.g., grouping customers by behavior). Reinforcement learning lets an AI agent learn by trial and error (receiving rewards or penalties) – famously used in game-playing AI (see below).
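The trial-and-error idea behind reinforcement learning can be sketched with tabular Q-learning, one classic algorithm among many. The environment here is a made-up five-cell corridor in which the agent is rewarded only for reaching the rightmost cell; the names (`step`, `choose_action`, `train`) and all hyperparameters are illustrative assumptions.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # index 0 = move left, index 1 = move right
GOAL = N_STATES - 1


def step(state, action):
    """Hypothetical environment: move, clamp to the corridor, reward 1 at the goal."""
    next_state = min(max(state + action, 0), GOAL)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL


def choose_action(q, state, epsilon, rng):
    """Epsilon-greedy: usually exploit the best-known action, sometimes explore."""
    if rng.random() < epsilon:
        return rng.randrange(len(ACTIONS))
    best = max(q[state])
    return rng.choice([i for i, value in enumerate(q[state]) if value == best])


def train(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            a = choose_action(q, state, epsilon, rng)
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q_table = train()
policy = ["left" if row[0] > row[1] else "right" for row in q_table[:GOAL]]
print(policy)
```

Nobody tells the agent that "right" is correct. It discovers this from the reward signal alone, which is the same principle, at a vastly smaller scale, that game-playing systems build on.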

In common usage, AI often refers specifically to narrow (weak) AI, which is AI designed for a particular task. All practical AI today is narrow: it works well for its specific purpose but cannot generalize beyond that. For example, a voice assistant like Siri or Alexa excels at understanding speech and simple commands, but can’t learn unrelated tasks on its own. As IBM explains, “Artificial Narrow Intelligence… is the only type of AI that exists today… It can be trained to perform a single or narrow task, often far faster and better than a human mind can… but it can’t perform outside of its defined task”. We already see narrow AI in tools like web search, image tagging, fraud detection, and recommendation engines.

In contrast, Artificial General Intelligence (AGI) – an AI that has human-like general reasoning capabilities – remains theoretical. AGI would “learn and perform any intellectual task that a human being can” without retraining. Finally, Artificial Superintelligence (machines exceeding human intelligence in all respects) is purely speculative at this stage. For now, “the narrow AI we have today can outperform humans only on its specific narrow tasks”.

In practical terms, AI, ML, and DL are often visualized as nested fields. For example, NASA illustrates AI as a large circle containing a smaller “Machine Learning” circle, which itself contains an even smaller “Deep Learning” circle. In other words, every deep learning system is a machine learning system, and every machine learning system is a form of AI. Deep learning’s multi-layer neural networks provide most of the recent breakthroughs in AI. These networks train on huge datasets (see next section) and can automatically extract features, enabling tasks like image recognition, speech understanding, and natural language generation.

History of AI: Milestones and Breakthroughs

AI has a long history marked by many peaks and valleys. Its development can be divided into eras:

- 1940s–50s: Foundational Ideas. In 1950, Alan Turing published “Computing Machinery and Intelligence,” introducing the idea of a Turing Test for machine intelligence. This raised the fundamental question, “Can machines think?” In 1956, John McCarthy, Marvin Minsky, and others organized the Dartmouth workshop, coining the term “Artificial Intelligence” and setting the field’s agenda. In 1958, Frank Rosenblatt built the perceptron, an early electronic neural network that could learn simple tasks. These early programs (like basic classifiers and games) showed promise but were limited by hardware and data constraints.

- 1960s–70s: Symbolic AI and First AI Winter. During the 1960s, AI researchers explored symbolic AI and rule-based systems. For example, the ELIZA chatbot (1966) mimicked conversation, and early expert systems like DENDRAL (1965) and MYCIN applied AI to scientific and medical problems. However, the optimism of the early era eventually collided with reality. By the late 1960s and 1970s, progress stalled due to the limitations of simple neural nets (as described in Minsky & Papert’s Perceptrons) and unmet expectations. Governments cut AI funding in what became known as the first “AI winter” (the term itself was coined in 1984, when researchers warned of a coming collapse).

- 1980s–90s: Expert Systems and Renewed Interest. AI revived in the 1980s with commercial expert systems (LISP machines, rule-based diagnostics) and more computing power. However, another boom-bust occurred in the late 1980s with the second AI winter as rule-based systems struggled. By the 1990s, new approaches emerged. In 1997, IBM’s Deep Blue made history by defeating world chess champion Garry Kasparov under tournament conditions. This was a landmark moment showing that computers could master complex games.

- 2000s: Big Data and Machine Learning. The 2000s saw the rise of big data, faster processors, and better algorithms, which set the stage for breakthroughs in the early 2010s. In 2011, IBM’s Watson won the game show Jeopardy! using natural language processing and machine learning. In 2012, Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton revolutionized AI with AlexNet, a deep convolutional neural network that won the ImageNet image-recognition competition by a wide margin. This “deep learning breakthrough” demonstrated that multi-layer neural networks could dramatically outperform previous methods on real-world tasks.

- 2010s–Present: Deep Learning Era. Since 2012, AI progress has accelerated. Google DeepMind’s AlphaGo used deep reinforcement learning to defeat a top human Go champion in 2016, a feat once thought decades away. AI-driven products entered everyday life: voice assistants like Siri (2011) and Google Assistant, recommendation engines on Netflix and Amazon, and advanced image recognition on smartphones. Breakthroughs in deep learning produced Generative Adversarial Networks (GANs) (2014) for generating realistic images, and the Transformer architecture (2017) for natural language (introduced in the paper “Attention Is All You Need”, which underpins modern language AI).

- 2020s: Large Language Models and Generative AI. In recent years, the rise of enormous neural models has captured headlines. In 2020, OpenAI released GPT-3, a language model with 175 billion parameters capable of generating fluent text. In 2022, ChatGPT (based on GPT-3.5) made conversational AI accessible to the public, reaching millions of users quickly. In 2023, OpenAI introduced GPT-4 (a multimodal LLM), Google unveiled its Bard chatbot (later renamed Gemini), and generative image models (DALL·E, Midjourney, Stable Diffusion) became mainstream. These events mark the latest leap in AI’s history, demonstrating AI’s new creative and interactive capabilities.

Major Milestones in AI History:

- 1950: Turing Test proposed.

- 1956: “Artificial Intelligence” term coined at Dartmouth Conference.

- 1966: ELIZA, one of the first chatbots, is created.

- 1969–1974: Limitations of early neural nets revealed (Perceptrons critique); first AI winter.

- 1997: IBM’s Deep Blue beats chess champion Kasparov.

- 2011: IBM Watson wins Jeopardy!.

- 2012: AlexNet CNN wins ImageNet; deep learning boom begins.

- 2016: DeepMind’s AlphaGo beats Go champion.

- 2020: OpenAI releases GPT-3 (175B LLM).

- 2022: ChatGPT popularizes conversational AI.

- 2023: GPT-4 and other multimodal LLMs and generative AI tools released.

This history shows how AI progressed from symbolic logic and simple learning models to today’s powerful neural networks and large language models. Each breakthrough depended on advances in algorithms, data, and computing power (discussed below).

Technical Foundations of Artificial Intelligence (AI)

Building and running AI systems requires several key technical components:

- Algorithms and Models: AI relies on algorithms that learn from data. In supervised learning, models (like decision trees, support vector machines, or neural networks) are trained on labeled datasets. In unsupervised learning, algorithms find hidden structures (for example, clustering customers by purchase behavior). Reinforcement learning algorithms enable agents to learn by reward signals, famously used by AlphaGo to master Go. Over the last decade, neural networks (especially deep networks) have dominated state-of-the-art AI. Neural models like Convolutional Neural Networks (CNNs) and Transformers can automatically extract features from data. As IBM notes, “Neural networks… consist of interconnected layers of nodes (analogous to neurons) that work together to process and analyze complex data”. Modern systems use variations of these networks for tasks like vision (CNNs), language (transformers/RNNs), and more.

- Data (Training Data): Large-scale AI requires large, high-quality datasets. Training an AI model means feeding it massive amounts of data so it can learn patterns. As one source explains, training algorithms need “massive amounts of data that capture the full range of incoming data”. For instance, image-recognition networks are trained on millions of labeled photos. Data must be cleaned and labeled properly; biased or poor-quality data leads to poor AI performance (see Ethics section). In summary, “AI uses data to make predictions,” and without sufficient representative data, models will not generalize well.

- Hardware (Computing Power): Modern AI, especially deep learning, demands powerful hardware. Training deep neural networks involves billions of calculations. GPUs (Graphics Processing Units) and specialized chips (like Google’s TPUs) are crucial because they can perform many parallel computations. As IBM puts it, “High-performance [GPUs] are ideal because they can handle a large volume of calculations…[necessary] to train deep algorithms through deep learning”. In practice, many AI labs run models on GPU clusters or cloud services. Distributed computing and cloud platforms (AWS, Azure, Google Cloud) also play a big role. Edge AI (running models on devices like phones and IoT gadgets) is an emerging trend for applications needing low latency or privacy.

- Software and Frameworks: AI development is accelerated by mature software frameworks. Libraries like TensorFlow, PyTorch, Keras, and scikit-learn provide building blocks (pre-written layers, optimizers, etc.) so developers don’t start from scratch. These frameworks enable researchers and engineers to quickly prototype neural nets and ML models. One article notes that ML frameworks “are more than just tools; they are the foundational building blocks… [that] enable developers to craft sophisticated AI algorithms without starting from scratch”. In addition, higher-level platforms (e.g., AutoML tools) and APIs (like OpenAI’s API, Azure AI, Google AI Platform) help businesses deploy AI without deep expertise.
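The description of neural networks as "interconnected layers of nodes" can be illustrated with a forward pass written from scratch. This is only a sketch: the weights below are hand-picked rather than learned, and the helper names (`dense`, `forward`) are hypothetical. Frameworks such as PyTorch or TensorFlow provide optimized, trainable versions of the same building block.

```python
def relu(x):
    """Rectified linear unit: pass positive values through, clip negatives to zero."""
    return max(0.0, x)


def dense(inputs, weights, biases, activation):
    """One fully connected layer: each node takes a weighted sum of all inputs,
    adds its bias, and applies the activation function."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]


# Hand-picked (not learned) parameters, chosen so the network computes XOR.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]  # two hidden nodes, two inputs each
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]             # one output node, two hidden inputs
OUTPUT_BIASES = [0.0]


def forward(x1, x2):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = dense([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    output = dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, lambda v: v)
    return output[0]


results = {(a, b): forward(a, b) for a in (0, 1) for b in (0, 1)}
print(results)
```

The output is 1 when exactly one input is 1 and 0 otherwise. A single layer cannot represent this function, but one hidden layer can, which hints at why stacking layers adds expressive power. In real systems the weights are found by training on data rather than chosen by hand.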

Key Technical Concepts (Summary):

- Supervised Learning: Training models on labeled data. Used for classification/regression tasks (e.g., image classification). Example algorithms: logistic regression, neural nets.

- Unsupervised Learning: Algorithms find patterns in unlabeled data (e.g., clustering, dimensionality reduction).

- Reinforcement Learning: AI agents learn via rewards/punishments through trial and error. Used in robotics, games (e.g., AlphaGo).

- Neural Networks: Computation graphs of neurons (nodes) and weighted connections. Deep networks (many layers) can learn complex features.

- Training Data: Labeled examples used to train models. Big, diverse datasets improve AI; poor data causes “garbage in, garbage out” problems.

- Compute Hardware: GPUs/TPUs accelerate training. Cloud computing provides scalable resources.

- AI Frameworks: Libraries (TensorFlow, PyTorch) offering pre-built functions and models. They enable rapid development and deployment of AI solutions.
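As an example of unsupervised learning from the summary above, the sketch below groups customers with k-means clustering, using no labels at all. The customer data (visits per month, average spend) and the choice of initial centroids are assumptions made for illustration; production code would more likely call a library such as scikit-learn.

```python
import math


def assign(points, centroids):
    """Label each point with the index of its nearest centroid."""
    return [
        min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
        for p in points
    ]


def update(points, labels, centroids):
    """Move each centroid to the mean of the points assigned to it."""
    new_centroids = []
    for c, old in enumerate(centroids):
        members = [p for p, label in zip(points, labels) if label == c]
        if not members:
            new_centroids.append(old)  # keep an empty cluster's centroid in place
        else:
            new_centroids.append(
                tuple(sum(coord) / len(members) for coord in zip(*members))
            )
    return new_centroids


def k_means(points, centroids, max_iterations=100):
    """Alternate assignment and update steps until the centroids stop moving."""
    labels = assign(points, centroids)
    for _ in range(max_iterations):
        new_centroids = update(points, labels, centroids)
        if new_centroids == centroids:
            break
        centroids = new_centroids
        labels = assign(points, centroids)
    return labels, centroids


# Hypothetical customers: (visits per month, average spend).
CUSTOMERS = [(1.0, 20.0), (2.0, 25.0), (1.5, 22.0), (9.0, 80.0), (10.0, 85.0), (9.5, 90.0)]
labels, centroids = k_means(CUSTOMERS, centroids=[CUSTOMERS[0], CUSTOMERS[3]])
print(labels, centroids)
```

The algorithm separates occasional low spenders from frequent high spenders purely from the structure of the data. Deciding what those groups mean is still left to a human analyst.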

Together, these foundations – algorithms, data, and compute – make modern AI possible. In the next section, we’ll explore how AI is applied across different real-world industries.

Applications of Artificial Intelligence (AI) Across Industries

AI is not just theoretical – it powers countless real-world applications. Below are some prominent industry examples, illustrating how AI technologies drive value:

Healthcare

AI is transforming healthcare in many ways:

- Medical Imaging & Diagnostics: Deep learning models analyze X-rays, MRIs, and CT scans with high accuracy. For example, Google researchers reported an AI system that outperformed human radiologists in detecting lung cancer from scans. AI also aids in spotting diabetic retinopathy, skin cancer, and more, often flagging diseases earlier.

- Drug Discovery & Biology: AI accelerates drug design by modeling complex biological processes. DeepMind’s AlphaFold uses AI to predict protein structures – a task vital for drug discovery. In 2020, AlphaFold achieved groundbreaking accuracy in the Critical Assessment of Protein Structure Prediction contest, potentially speeding up how quickly new medicines can be developed.

- Personalized Medicine: AI analyzes genetic and patient data to tailor treatments. For instance, predictive algorithms can suggest which chemotherapy drugs are most likely to work for a specific patient.

- Patient Monitoring & Virtual Care: Wearable devices and apps powered by AI track vital signs and even early symptoms (like detecting atrial fibrillation). Chatbots and virtual assistants help patients with basic triage, medication reminders, and mental health support (though these should augment, not replace, professional care).

Finance

AI has rapidly penetrated banking, insurance, and financial markets:

- Fraud Detection and Security: Machine learning models scan millions of transactions in real time, flagging anomalies that might indicate credit card fraud or money laundering. This reduces losses and false alarms.

- Trading and Investment: Quantitative funds use AI-driven algorithms to analyze market data and execute trades. Some high-frequency trading systems rely on ML to detect patterns faster than humans.

- Loan Underwriting and Credit Scoring: AI evaluates loan applicants by examining unconventional data (social, transactional) and improves risk assessment. This can speed approvals but also raises fairness questions (see Ethics).

- Document Analysis: Natural language processing (NLP) systems read legal and financial documents. A striking case study is JPMorgan’s COiN (Contract Intelligence) platform: a machine-learning tool that automates the review of commercial loan agreements. Reportedly, COiN reduced the lawyers’ workload by about 360,000 hours per year, dramatically cutting manual effort.

- Customer Service: Banks and insurers deploy AI chatbots and voicebots to answer customer queries 24/7, handle routine requests (e.g., balance inquiries), and personalize financial advice.
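The anomaly flagging described under Fraud Detection can be approximated, in its simplest form, by comparing each new transaction with a customer's spending history. The sketch below is a deliberately basic statistical stand-in for the learned models banks actually deploy; the history, the amounts, and the threshold of three standard deviations are all illustrative assumptions.

```python
import statistics


def fit_profile(history):
    """Summarize a customer's past transaction amounts as (mean, standard deviation)."""
    return statistics.mean(history), statistics.stdev(history)


def is_anomalous(amount, profile, threshold=3.0):
    """Flag an amount that lies more than `threshold` standard deviations from the mean."""
    mean, stdev = profile
    return abs(amount - mean) / stdev > threshold


# Hypothetical past card payments for one customer.
HISTORY = [20.0, 25.0, 22.0, 19.0, 24.0, 21.0, 23.0]
profile = fit_profile(HISTORY)

# Score a few new transactions against that profile.
flags = {amount: is_anomalous(amount, profile) for amount in (22.5, 27.0, 500.0)}
print(flags)
```

Only the 500.0 payment is flagged. Real systems weigh many more signals (merchant, location, timing, device) and learn the decision boundary from labeled fraud cases, but the underlying question is the same: how unusual is this event relative to what has been seen before?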

Marketing and Retail

Businesses use AI to better understand and serve customers:

- Personalization & Recommendations: Online retailers and streaming services (like Amazon and Netflix) rely on AI engines to recommend products or media. These systems analyze your past behavior to suggest items you’re likely to buy or enjoy, significantly boosting engagement and sales.

- Advertising Optimization: AI helps target online ads to the right audience. Machine learning models adjust ad bids in real time, optimizing spend for clicks or conversions.

- Customer Insights: By analyzing customer data (browsing history, purchase records, social media), AI identifies trends and customer segments. Marketers use these insights to craft targeted campaigns.

- Chatbots and Virtual Assistants: Many companies use AI-powered chatbots on websites and apps to answer FAQs, process orders, and improve user experience.
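The recommendation idea above (suggest items based on past behavior) can be sketched with user-based collaborative filtering. This is one simple approach, not the algorithm any named retailer or streaming service uses; the users, items, and ratings are invented for illustration.

```python
import math

# Hypothetical ratings: user -> {item: rating on a 1-5 scale}.
RATINGS = {
    "ana": {"laptop": 5, "mouse": 4, "keyboard": 5},
    "ben": {"laptop": 4, "mouse": 5, "keyboard": 4, "monitor": 5},
    "chloe": {"novel": 5, "cookbook": 4, "mouse": 1},
}


def cosine_similarity(a, b):
    """Similarity of two users' rating vectors (unrated items count as zero)."""
    shared = set(a) & set(b)
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(user, ratings, top_n=1):
    """Rank items the user has not rated by similarity-weighted ratings of other users."""
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        similarity = cosine_similarity(ratings[user], other_ratings)
        for item, rating in other_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + similarity * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


suggestions = recommend("ana", RATINGS)
print(suggestions)
```

Because ana's tastes closely match ben's, the item ben liked that ana has not rated (the monitor) ranks first, well ahead of the books rated by the dissimilar user chloe.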

Manufacturing and Industry

AI drives efficiency and innovation in factories and supply chains:

- Robotics and Automation: Smart robots powered by AI perform tasks on assembly lines – welding, packaging, sorting – often collaborating safely with human workers. AI vision systems check products for defects at high speed.

- Predictive Maintenance: Sensors on machines generate data (vibrations, temperature, pressure). AI models analyze this data to predict equipment failures before they happen. For example, AI-driven maintenance systems can alert operators to replace a worn bearing, avoiding costly downtime. As a result, manufacturers see higher productivity and lower costs. (Global equipment makers like Siemens and GE offer such AI maintenance platforms.)

- Supply Chain Optimization: AI helps forecast demand, optimize inventory, and route shipments. By analyzing weather, market trends, and logistical data, AI can improve delivery times and reduce waste.

Other Notable Areas

- Transportation: Self-driving cars and trucks (by companies like Tesla, Waymo, and traditional automakers) are under development using AI perception and planning. Drones and robotic delivery vehicles also rely on AI for navigation.

- Agriculture: AI analyzes satellite and sensor data to optimize watering, fertilization, and harvesting. Crop monitoring drones use computer vision to detect pests or diseases.

- Education: AI tutors and adaptive learning platforms personalize education, adjusting difficulty based on student performance. Automated grading and plagiarism detection tools also assist educators.

Across all industries, AI often appears behind the scenes as predictive analytics or decision-support tools. For example, supply chains for retail and manufacturing use AI to predict consumer demand and optimize operations, even if customers never see “AI” explicitly. In healthcare, a doctor’s diagnosis might be aided by an AI tool that highlights suspicious X-ray regions. These case studies show that AI can improve accuracy, speed, and cost-effectiveness. However, as we discuss next, the use of AI also raises important ethical questions.

Ethical Considerations and Risks of Artificial Intelligence (AI)

AI’s power comes with serious ethical and societal challenges. Key concerns include:

- Bias and Fairness: AI systems learn from data that often reflects human biases. If training data is imbalanced or prejudiced, AI can inherit and even amplify those biases. For example, an AI trained on historical hiring data may discriminate against certain groups, or a predictive policing system may unfairly target communities. Ensuring that AI decisions are fair and nondiscriminatory is a critical concern. Researchers and regulators stress the need for diverse training data and fairness audits.

- Privacy: Many AI applications require large amounts of personal data. For instance, voice assistants listen to users’ speech, and health AI might access sensitive medical records. The ethical challenge is how to collect, use, and protect this data. Improper use can violate privacy or leak sensitive information. Strict data governance, anonymization, and transparent data practices are essential to maintain trust.

- Transparency and Accountability: Complex AI models (like deep neural networks) are often “black boxes” – their internal decision processes are hard even for experts to interpret. This opacity raises questions: Why did the AI make that prediction? Who is responsible if it’s wrong? For safety-critical uses (medicine, finance, law), regulators and users demand explainability. Opaque AI can erode trust if errors cannot be understood or corrected. Thus, there is a push for “explainable AI” techniques and standards that let humans audit model behavior.

- Autonomy and Control: As AI systems become more autonomous (e.g., self-driving cars, drones, industrial robots), ensuring that humans remain in control is vital. There are risks if an AI acts unpredictably or fails. For instance, an autonomous vehicle misinterpreting a situation could cause accidents. Experts warn that improper oversight of powerful AI could have severe consequences. Stephen Hawking famously cautioned that “unless we learn how to prepare for… the potential risks, AI could be the worst event in the history of our civilization”. Whether or not one agrees with such dire predictions, it underscores the need for careful safety research and human-AI collaboration standards.

- Job Displacement: Automation from AI can replace some human jobs, raising economic and social issues. Routine tasks in manufacturing, administration, or even complex professions (like drafting legal documents) can be automated. This might increase productivity but also cause job displacement and widen economic inequality. Studies estimate that many industries will see certain roles vanish or transform. The ethical imperative is to manage this transition through job retraining, education, and new job creation, so workers aren’t left behind.

- Security and Misuse: AI is a dual-use technology. While it brings benefits, it can also be misused. For example, deepfake technologies (AI-generated fake images or videos) can spread misinformation or defraud individuals. AI-driven cybersecurity attacks are also a concern (attackers using AI to craft phishing or to break systems). Defense applications pose grave questions: Autonomous weapons (“killer robots”) that select targets without human input raise legal and moral red flags. Governments and societies must consider regulations to prevent dangerous misuse.
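The fairness audits mentioned under Bias and Fairness often begin with a simple measurement: do different groups receive favorable decisions at similar rates? The sketch below computes that gap, sometimes called the demographic parity difference, on made-up decisions. The group names and data are hypothetical, and this is only one of several fairness metrics, which can disagree with one another.

```python
from collections import defaultdict

# Hypothetical model decisions: (group, was_selected).
DECISIONS = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]


def selection_rates(decisions):
    """Fraction of favorable decisions within each group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def demographic_parity_gap(rates):
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


rates = selection_rates(DECISIONS)
gap = demographic_parity_gap(rates)
print(rates, gap)
```

A gap of 0.5 here means one group is selected three times as often as the other. A large gap does not prove discrimination on its own, but it is a signal that the training data and the model deserve closer review.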

In response to these issues, policymakers and researchers emphasize ethics. For instance, the European Union’s AI Act (politically agreed in 2023 and formally adopted in 2024), the world’s first broad AI regulation, bans certain “unacceptable-risk” AI uses (like untargeted scraping of facial images to build recognition databases), imposes strict requirements on “high-risk” systems, and mandates transparency for general-purpose models. Some U.S. states have also begun passing AI laws. International organizations (e.g., UNESCO, IEEE) have published AI ethics guidelines. In practice, many companies now have internal AI ethics boards.

Overall, the AI debate balances enormous potential against real risks. As USC scholars summarize: AI’s ethical challenges – bias, privacy, transparency, control, and more – require a multidisciplinary approach involving technologists, ethicists, and regulators. Failing to address these considerations could lead to mistrust or harm, slowing down the positive benefits of AI.

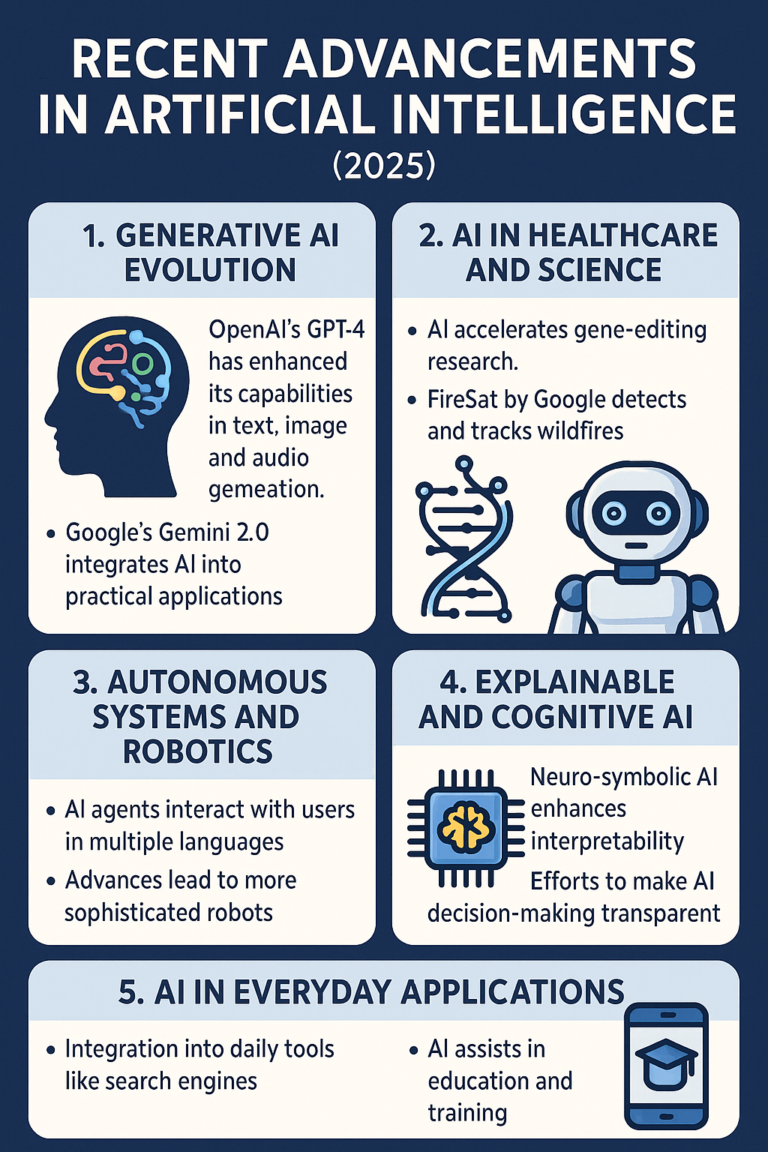

Recent Advancements in Artificial Intelligence (AI)

The past few years have seen explosive advances in AI, especially in generative models and autonomous systems:

- Generative AI (GenAI): These models generate new content. For example, large language models (LLMs) like OpenAI’s GPT series and Google’s PaLM can write essays, answer questions, or even generate code. Image models (e.g., DALL·E, Stable Diffusion, Midjourney) can create realistic art or images from text prompts. Technically, generative AI uses deep learning to model data distributions and produce novel outputs. As IBM notes, generative AI can create “original text, images, video, and other content”. In practice, tools like ChatGPT have made AI text generation widely accessible. These generative models often use Transformer architectures (introduced in 2017) that excel at handling sequences (like language). For instance, the Transformer-based GPT-4 (released in 2023) can process both text and images, although OpenAI has not disclosed its parameter count. Generative AI is not limited to language and images – there are models generating music, 3D designs, even game levels. These advances mean AI can now assist in creative and design tasks, from drafting marketing copy to generating synthetic training data.

- Large Language Models (LLMs): These are a specific class of generative AI focused on text. An LLM is “a type of AI algorithm that uses deep learning techniques and massively large data sets to understand, summarize, generate, and predict new content”. Essentially, an LLM is a giant neural network trained on huge text corpora (books, websites, etc.). Because they have so many parameters (billions), they capture subtle patterns in language. LLMs power chatbots, translation systems, and writing assistants. Notably, OpenAI’s GPT-3 (175B parameters) and GPT-4, Google’s LaMDA/Bard, Meta’s LLaMA, and others are in this category. These models can perform surprisingly well at tasks they weren’t explicitly trained for (few-shot learning). For example, GPT-4 can write stories, solve math problems, and even write code by treating prompts as instructions.

- Autonomous and Embodied AI: Progress in AI hardware and algorithms has advanced robotics and autonomous agents. Self-driving cars (by Waymo, Tesla, etc.) use AI to fuse camera, lidar, and radar data for navigation. Autonomous drones and robots (such as Boston Dynamics’ Atlas) are learning to walk, run, and carry out complex maneuvers. In games, deep reinforcement learning (RL) continues to impress: DeepMind’s AlphaZero (2017) learned to master chess, shogi, and Go from scratch by playing against itself. RL is also used in automated scheduling, resource management, and even in finance (e.g., trading). These systems highlight how AI is moving beyond purely digital tasks to physical, real-world decision-making.

- Other Notable Technologies: Advanced AI models such as diffusion models (for image synthesis) and GANs (Generative Adversarial Networks) have matured. Multi-modal models, which handle text, images, and audio together (e.g., CLIP, GPT-4, Gato), are emerging. Tiny AI (run on phones or IoT devices) and federated learning (AI on distributed devices with privacy) are also recent trends. In short, breakthroughs in model architectures (transformers, attention mechanisms), scale (billions of parameters), and data (massive internet text) have together ushered in this era of powerful AI tools.
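The attention mechanism at the heart of Transformer architectures can be written in a few lines. The sketch below implements scaled dot-product attention for a single head in plain Python, with no learned projection matrices, masking, or batching, so it is a teaching aid rather than a usable model. The example vectors are arbitrary.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: each query receives a weighted average of
    the values, weighted by how well the query matches each key."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


KEYS = [[1.0, 0.0], [0.0, 1.0]]
VALUES = [[10.0, 0.0], [0.0, 10.0]]

# A query aligned with the first key pulls the output toward the first value.
focused = attention([[1.0, 0.0]], KEYS, VALUES)
# A query that matches neither key attends to both values equally.
neutral = attention([[0.0, 0.0]], KEYS, VALUES)
print(focused, neutral)
```

In a language model, queries, keys, and values are all computed from the tokens of the input, so each token can "look at" the other tokens that matter most for predicting what comes next.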

Recent AI research is advancing rapidly. For example, GPT-3 (2020) demonstrated powerful text generation, GPT-4 (2023) extended this to images, and open-source models like Stable Diffusion (2022) democratized image generation. The popularity of tools like ChatGPT (released Nov 2022) has made AI part of everyday conversation. In summary, the recent wave of AI is characterized by generative models and large-scale neural networks that can perform tasks previously thought to be uniquely human.

The Future of Artificial Intelligence (AI) – Trends, Opportunities, and Challenges

Looking ahead, AI is poised to grow even more influential, but with hurdles to overcome.

- Emerging Trends: Several exciting trends are on the horizon. Quantum computing may eventually accelerate AI by solving certain optimization problems faster (though practical quantum AI is still experimental). Edge AI – running AI models on smartphones, IoT sensors, and autonomous vehicles – will become more common as devices grow more powerful and 5G connectivity expands. Multi-agent systems, where many AI “agents” coordinate (in traffic management or robotics), are another research area. Brain-computer interfaces (e.g., Neuralink’s brain-chip work) hint at a future where AI interfaces directly with neural signals, enabling new ways to communicate or restore lost functions. We also expect AI to integrate with other advanced fields: for instance, AI-designed materials, advanced biotech, and climate science (using AI to model and mitigate climate change). Overall, the trend toward “AI everywhere” will continue, from smart home assistants that anticipate our needs to AI companions for learning and entertainment.

- Opportunities: The potential benefits are vast. Analysts project huge economic impacts from AI. For example, one forecast predicts that generative AI alone could add $280 billion in software revenue by 2026. AI promises gains in virtually every sector: it could double the rate of innovation in drug discovery, optimize energy use to combat climate change, personalize education at scale, and automate tedious tasks so people focus on creative work. In manufacturing and agriculture, AI-driven efficiency could significantly increase productivity and reduce waste. In customer service, AI chatbots and recommendation systems can improve user experiences around the clock. Even creative industries benefit: AI tools can help artists, writers, and designers by suggesting ideas or generating prototypes.

- Challenges and Risks: Alongside opportunities, challenges loom. Key issues include:

- Ethical/Social Challenges: The concerns of bias, privacy, and job displacement (discussed above) will persist and possibly grow as AI spreads. Societies will need to manage workforce transitions and address digital divides (ensuring AI benefits are widely shared).

- Safety and Alignment: Ensuring that AI systems act in accordance with human values (the “alignment problem”) is crucial. For powerful AI, we need ways to ensure that systems don’t take harmful shortcuts. Developing robust, explainable AI is an ongoing technical challenge.

- Regulation and Governance: Policymakers are still catching up. Striking the right balance between innovation and protection will be key. Recent laws (EU AI Act) set precedents, but a global consensus is hard. Over-regulation could stifle useful AI, while under-regulation could allow misuse.

- Resource and Environmental Impact: Training very large AI models requires enormous computational resources and energy. There is growing awareness of AI’s carbon footprint. Future AI research must consider sustainability (e.g., more efficient algorithms, green data centers).

- Technical Limitations: Current AI still lacks common sense and broad reasoning ability. Many tasks still require human intuition. Additionally, AI models can be fooled by adversarial inputs or fail in unexpected ways outside their training distribution. Making AI more robust and secure is an active area of research.


Researchers, businesses, and governments are actively exploring solutions: explainable AI methods, AI safety research, new regulations, and education programs to reskill workers. In summary, the future of AI will likely involve a virtuous cycle of innovation and oversight. AI will open new frontiers (intelligent automation, autonomous exploration, global data analysis), but our society must guide its path carefully.

Looking Ahead: AI’s next phases may include achieving general-purpose AI assistants, AI-driven breakthroughs in science, and fully autonomous vehicles or robots operating in the world. For example, companies are investing in “AI copilots” for coding, writing, and data analysis. Meanwhile, frontier research in areas like neuromorphic computing and AI explainability may address current limitations. Ultimately, the goal is to harness AI’s opportunities (better health, cleaner environment, smarter education) while mitigating its risks (privacy loss, bias, social disruption).

In conclusion, Artificial Intelligence is a rapidly evolving field that blends computer science, data science, and domain expertise. We’ve seen it grow from simple experiments to pervasive technologies in healthcare, finance, manufacturing, and beyond. By understanding AI’s basic concepts, its history, and its technical underpinnings, we can better appreciate its potential and navigate its challenges. This balanced perspective will be crucial as AI continues to reshape our world.